Using EXPLAIN ANALYZE to debug a slow SQL query

As a software developer, performance problems are a “good” problem to have, because it means your product is being used! Here at Reverb, we are lucky to be in a position where fixing and preventing performance issues is of real value. And when it comes to scaling applications, the first culprits to arise are usually poorly optimized SQL queries.

In this post I want to share an example of how we debugged and fixed a slow SQL query. I’ll explore the EXPLAIN ANALYZE tool, and talk about a few things to keep in mind when crafting performant SQL queries. Note that while this example uses PostgreSQL, the ideas apply to all modern SQL databases.

The Query

On Reverb.com, buyers can send messages to sellers to ask questions about their products and negotiate. Like many SAAS products, we have many small-footprint users, and a few very large-footprint users. It was the latter case that brought to light that we had a very slow-running query on our page that shows you a list of your unread conversations, to the tune of 10–20 second page loads 😱.

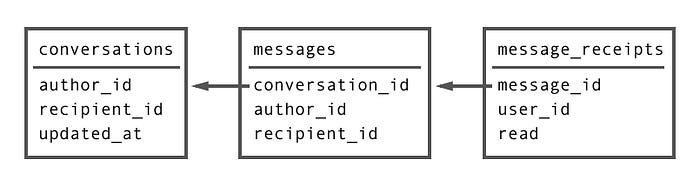

The relevant data model is a pretty straight-forward message thread system, with three tables:

Each conversation can have one or more message, and each message will have a message_receipt for each of the two users involved, indicating whether they have read it yet or not. And finally, the query:

SELECT DISTINCT conversations.*

FROM conversations

INNER JOIN messages

ON messages.conversation_id = conversations.id

INNER JOIN message_receipts

ON message_receipts.message_id = messages.id

WHERE (conversations.author_id = ? OR conversations.recipient_id = ?)

AND message_receipts.read = 'f'

AND message_receipts.user_id = ?

ORDER BY conversations.updated_at DESC

LIMIT 30This returns the latest 30 conversations that have a message you have not read yet.

Debugging with EXPLAIN ANALYZE

Our first step is to visualize what’s happening under the hood with EXPLAIN ANALYZE. Prefixing your query with EXPLAIN ANALYZE will print a query plan that shows you how the database ended up fetching the requested records. Note that you could alternatively just run EXPLAIN, which will not actually run the query, and instead just return the query plan. This is preferable if your query is incredibly slow, or if it writes to the database. Otherwise, adding the ANALYZE is helpful because you will get real execution times for each operation, instead of estimates. Here’s what we get:

The first thing you’ll notice if you look at the bottom is that it returned 21 rows and took a total of about 15s to run. Yep, no good. I find it makes more sense to read these plans from the “inside out”, starting with the most indented portion and working my way out. Here’s the first block we’ll look at:

-> BitmapOr (actual time=4.666..4.666 rows=0 loops=1)

-> Bitmap Index Scan on index_conversations_on_author_id (actual time=2.101..2.101 rows=367 loops=1)

Index Cond: (author_id = ?)

-> Bitmap Index Scan on index_conversations_on_recipient_id (actual time=2.563..2.563 rows=801 loops=1)

Index Cond: (recipient_id = ?)Note that I’ve edited out the (cost=...) sections for sake of brevity. These are the estimated costs (in arbitrary units) that the query plan generates before running the query itself. Since we used ANALYZE, we have actual times (in milliseconds), which are all the more useful. You’ll also see that PostgreSQL shows two values here: the first is the startup cost for the operation, the second is the total cost. Generally the total cost is all we care about.

This block is showing us that it ran two Bitmap Index Scans, and then joined the result together with a BitmapOr. We can match this up to our query filter WHERE (conversations.author_id = ? OR conversations.recipient_id = ?) . So this means we have indexes on both of these fields, which is great since the database doesn’t have to scan over every single conversation to find the ones this user has participated in. In order to get these 367 + 801 = 1,168 conversations, it only took 4.666 ms; not too bad, so let’s continue working outward.

-> Nested Loop (actual time=4.864..6031.241 rows=12720 loops=1)

-> Bitmap Heap Scan [LAST EXAMPLE COLLAPSED]

-> Index Scan using index_messages_on_conversation_id on messages (actual time=0.882..5.135 rows=11 loops=1168)

Index Cond: (conversation_id = conversations.id)Next our database used our index_messages_on_conversation_id index on our messages table, in preparation for the join to our conversations result from the last step. Again, this is good that an appropriate index is being used here. The Nested Loop line simply represents the process for joining these two sets together. We see that this returns 12,720 rows, for a total time of about 6 seconds. Yikes! At this point, we know the query has already gotten out of hand. Let’s move outward one more time though:

-> Nested Loop (actual time=14517.357..14517.714 rows=1 loops=1)

-> Nested Loop [LAST EXAMPLE COLLAPSED]

-> Index Scan using index_message_receipts_on_message_id on message_receipts (actual time=0.665..0.665 rows=0 loops=12720)

Index Cond: (message_id = messages.id)

Filter: ((NOT read) AND (user_id = ?))

Rows Removed by Filter: 2This is very similar to the last step. Yet again we are using an index on message_receipts and running a Nested Loop in order to join the receipts to our last results. This time, we’ve added another 8.5s for a grand total of 14.5s, which accounts for 99% of our entire query time.

Where did we go wrong?

Remember that this is one of our more active sellers. They’ve participated in 1,168 conversations, which in total account for 12,720 messages. This already is an absurd number of rows that the database has to load into memory to execute the first INNER JOIN. Then it must do the same by joining a single message_receipts entry to each row of our intermediate result set. Only once it has executed both of these JOINs can it finally filter by the read value on our receipts (this step can be found within our final index scan: Filter: ((NOT read) AND (user_id = ?)) ).

Any time the database has to read rows from disk, you incur a cost. Doing this tens of thousands of times in a single query leads to an unhappy end-user. And this query will only get worse as time goes on, since we’re looping over their entire history of messages.

So how can we reduce the number of intermediate rows that we load? All we really care about are unread conversations, which most users, large or small, only have a few of at a time. We can therefore imagine that should we reverse the order of the joins, starting with message_receipts, that even for our largest sellers we should only be loading 10s or maybe 100s of rows at a time.

JOIN Order

It would be understandable to think that reversing the order of joins in our query would fix the problem. However, the planner does not read the query like a sequential script; it actually has the flexibility to reorder inner-joins and filters in whichever way it thinks will be most performant. In our case it just happened to order the joins the same as our query. And the way it makes these decisions is by looking at statistics for each table — eg, how many times do certain values appear in a column? By looking at the estimated costs in the query plan, we get a glimpse into how these statistics were informed the resulting query. Here are those lines again for both the initial conversations scan and our join to messages:

Bitmap Heap Scan on conversations (cost=6.49..196.96 rows=79 width=32) (actual time=4.816..15.937 rows=1168 loops=1)Nested Loop (cost=6.92..673.93 rows=342 width=36) (actual time=4.864..6031.241 rows=12720 loops=1)

In both cases, the row estimates are way off! Instead of our actual 12k+ messages, it was guessing we’d end up with about 342 — which may be a reasonable guess given the average number of messages across all users, but for our more active users, this is far from the case.

This begged a couple of questions:

- Can we improve the statistics postgres is using to create its estimates?

The answer is yes — one of the statistics that it’s keeping for each table is a list of the most common values for each column, and the frequency of each one (see documentation). By default it stores 100 values, but it can be configured to be as high as 10,000. This explains why this query ran fine for our very largest users — their user ids appeared in this list, while the user in question happened to fall somewhere beyond those top 100. This can be a useful tuning parameter for certain database sizes and distributions. - Can we enforce a JOIN order?

We found there is a configuration parameterjoin_collapse_limitthat can be used toward this end (see documentation). However, applying this to a single query from within our ORM would be a little messy and in some ways goes against postgres’ philosophy of letting the planner do its job.

While #1 above alone would solve this issue for this user and probably every user (at least given the current state of this table), we realized that there was an even simpler solution. Look back at this condition we’re applying: (conversations.author_id = ? OR conversations.recipient_id = ?) . This is in fact redundant, since we are already scoping to the current user here: message_receipts.user_id = ? . If we simply remove this filter, then the most constricted table will certainly be the message_receipts, and the planner will have to choose it as the initial join table!

A new query

SELECT DISTINCT conversations.*

FROM conversations

INNER JOIN messages

ON messages.conversation_id = conversations.id

INNER JOIN message_receipts

ON message_receipts.message_id = messages.id

WHERE message_receipts.read = 'f'

AND message_receipts.user_id = ?

ORDER BY conversations.updated_at DESC

LIMIT 30This is the same query as before, but without that filter on conversations. Here’s our EXPLAIN ANALYZE for this query:

Our most inner Nested Loop uses indexes on both our message_receipts and messages table to join them together, returning a total of 328 rows — much smaller. The next loop joins a conversation for each message, which since it’s 1-to-1, doesn’t change our row count. By removing the one line, we’ve brought the query to under a millisecond!

Conclusions

Our takeaway from this is that when you have a table with a highly irregular distribution of data, as is often the case when it has a foreign key to users, the query plan and the resulting performance can very wildly from one user to another. And surprisingly, it’s not just a difference between small users and large users; based on the statistics stored for the “most common values” of that foreign key column, assuming default settings, the 100th most-common user could get a very different query than the 101st!

Tuning these queries will always require a bit of experimentation, but we can use EXPLAIN ANALYZE to reveal stark differences between two queries that otherwise return the same results.

Further Reading

- PostgreSQL documentation on EXPLAIN: https://www.postgresql.org/docs/9.4/static/using-explain.html

- Use the Index, Luke — a great, free guide to database performance: http://use-the-index-luke.com/

- From above, a dictionary of PostgreSQL EXPLAIN outputs: http://use-the-index-luke.com/sql/explain-plan/postgresql/operations (other database available as well)